We have lots of updates from the Neely Center! We’re very proud of the inaugural cohort of the Neely Ethics and Technology Fellows program led by Parama Sigurdsen and Carsten Becker. At our culminating event on June 21, 2024, our five fellows presented their research projects on the ethics of mixed reality as it relates to fashion, gaming, healthcare, and advertising (videos forthcoming). The event also featured industry insights from leaders such as Avi Bar-Zeev, April Boyd Noronha, and Sonya Haskins, as attendees explored how to harness the burgeoning $377 billion spatial computing market for good. Learn more about the event here.

Introducing the Neely Purpose-Driven XR Library

During the event, we launched our new Neely Purpose-Driven XR Library as a way to push the mixed reality industry toward creating tech with specific, purpose-driven use cases. This paradigm offers a contrast to creating expensive tech that does cool things but either harms users in the process or fails to produce clear user value. We’ll be discussing this theme as it relates to AI as well but, for now, check out our exciting XR library here (and feel free to reach out if you want to flag some interesting tech in academia or industry that you think should be included).

America in One Room: The Youth Vote

We launched a new project as we continue gathering public input to guide AI design and governance. The Neely Center partnered with four other organizations to host America in One Room: The Youth Vote in DC. This was the first-ever Deliberative Polling event with a nationally representative sample of first-time voters. The team brought nearly 500 rising high school seniors and college freshmen from across the political spectrum and all 50 states to deliberate on 53 proposals across major areas relevant to the upcoming election. Close Up Foundation did an incredible job running the event along with the Deliberative Democracy Lab at Stanford and the other three partners: The Generation Lab, Helena, and the Neely Center. We’ll provide a report with the data soon, but in the meantime, you can learn more about Deliberative Polling and one of our partners, James Fishkin, who originated the concept in 1988, here.

Photo credit: Anthony Nsofor

We will build on this event with the launch of our youth index to examine how young people ages 13-17 are experiencing social media and AI as well as with additional in-person and online events to foster deliberation around key proposals on how to design and govern powerful AI systems. In the meantime, check out the additional project updates below.

Sincerely,

Nate Fast, Executive Director

AI Policy Gathering in DC

While in DC, the Neely Center hosted an AI Policy Happy Hour in the rooftop lounge of USC’s new DC Campus (co-organized by Nate Fast and Chloe Autio, CEO of Autio Strategies). AI policy leaders from academia, tech companies, governmental agencies, and nonprofits gathered to discuss how to increase societal input into AI systems and how to protect democratic processes as we head into the election. Below, Jim Fishkin, Director of the Deliberative Democracy Lab, explains the tool of Deliberative Polling and how it can be used to assess a population’s opinions on key issues under good conditions (i.e., access to briefing on the issues, deliberation with a representative group, and the opportunity to ask questions of diverse experts). Mia Charity, President of Close Up Foundation, and Alice Siu, Associate Director of the Deliberative Democracy Lab, also offered remarks at the event.

The Surgeon General Has New Social Media Recommendations

In a new piece for The Boston Globe, Managing Director of the Neely Center for Ethical Leadership and Decision Making, Ravi Iyer, shares insights on how we can better protect young people online. He recently collaborated with policy leaders in Minnesota to pass groundbreaking legislation that will “force social media platforms like Facebook, Instagram, TikTok, and Snapchat to reveal the results of their user experiments, disclose how their algorithms prioritize what users see on their feeds, explain how they treat abusive actors, and reveal how much time people spend on these platforms, including how often people are receiving notifications.”

“Minnesota’s new law is an important step to begin holding social media companies accountable, and serves as a call to action to other states,” argues Iyer in the op-ed.

Neely Center Design Code in Tech Policy Press Article

In a recent Tech Policy Press article discussing current design policy with a focus on the California Age Appropriate Design Code, the authors talked about the Neely Center’s Design Code for Social Media as well as the recent Minnesota legislation that builds on our design code. Through the Neely Center, Ravi worked with stakeholders to propose his own design code reflecting consensus on best practices across the industry. He explained the core value of the “upstream” design and how it can respond to challenges like hate speech. “If you attack hate speech by identifying it and demoting it,” he explained, “you will miss out on, for example, ‘fear speech,’ which still creates a lot of hate. It can be as or more harmful than hate speech. You can’t define all the ways people hate or mislead one another… so you want to discourage all the harmful content, not just what you can identify. This affects the whole ecosystem: publishers see what is and isn’t rewarding.”

National Academies Workshops on AI Risk Management

Neely Center Director Nate Fast was invited to join a committee organized by the National Academies of Sciences, Engineering, and Medicine, sponsored by the National Institute of Standards and Technology (NIST). The committee produced four engaging workshops diving into NIST’s AI Risk Management Framework. Topics included how to operationalize the framework as well as how to extend and expand it in important ways. Fast helped lead Workshops 1 and 4, speaking on the topic of how to broaden stakeholder participation. You can watch the workshops here (the final workshop happened live in DC).

Workshop 1 – Ensuring Broad Stakeholder Participation (June 11th)

Workshop 2 – Evaluation, Testing, and Oversight (June 20th)

Workshop 3 – Safety in Context (June 26th)

Workshop 4 – Designing Paths Forward in AI Risk Management (July 2nd)

Photo by Ben Shneiderman

The AI That Could Heal a Divided Internet

In a recent article in Time, Jigsaw, a Google subsidiary, revealed a new set of AI tools that can score posts based on the likelihood that they classify as good content: Is a post nuanced? Does it contain evidence-based reasoning? Does it share a personal story or foster human compassion? By returning a numerical score (from 0 to 1) representing the likelihood of a post containing each of those virtues and others, these new AI tools could allow the designers of online spaces to rank posts in a new way. Instead of posts that receive the most likes or comments rising to the top, platforms could, in an effort to foster a better community, choose to put the most nuanced comments, or the most compassionate ones, first. Jigsaw’s new AI tools could result in a paradigm shift for social media. Elevating more desirable forms of online speech could create new incentives for more positive online and possibly offline social norms. If a platform amplifies toxic comments, “then people get the signal they should do terrible things,” stated Ravi Iyer, a technologist and the Managing Director of the Neely Center. He went on to add, “If all the top comments are informative and useful, then people follow the norm and create more informative and useful comments.”
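For readers who want to see the ranking idea in concrete terms, here is a minimal sketch of the difference between ordering posts by likes and ordering them by an attribute score. It is not Jigsaw's tool or API: the `Post` class, the function names, the attribute names ("nuance", "compassion"), and every score below are made up for illustration, assuming only that some classifier has already returned a score between 0 and 1 for each attribute.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    text: str
    likes: int
    # Hypothetical per-attribute scores in [0, 1]; names and values are illustrative.
    scores: dict = field(default_factory=dict)


def rank_by_likes(posts):
    """Engagement-style ranking: most-liked posts first."""
    return sorted(posts, key=lambda p: p.likes, reverse=True)


def rank_by_attribute(posts, attribute):
    """Attribute-style ranking: highest score for the chosen attribute first.

    Posts with no score for that attribute are treated as scoring 0.0.
    """
    return sorted(posts, key=lambda p: p.scores.get(attribute, 0.0), reverse=True)


# Made-up sample data, not real classifier output.
posts = [
    Post("Hot take", likes=950, scores={"nuance": 0.10, "compassion": 0.05}),
    Post("Careful summary of the evidence", likes=40, scores={"nuance": 0.90, "compassion": 0.30}),
    Post("A personal story", likes=120, scores={"nuance": 0.55, "compassion": 0.85}),
]

for post in rank_by_attribute(posts, "nuance"):
    print(post.text)
```

In this toy data, the most-liked post drops to the bottom once the feed is ordered by the "nuance" score, which is the kind of shift in incentives the article describes.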

Do People Still Have Meaningful Connections on Social Media?

In a recent Substack post, Matt Motyl, a Senior Advisor at the Neely Center, analyzes new data from the Neely Social Media Index to determine whether the rate of users finding meaningful connections on social platforms has increased, decreased, or remained the same over the past 15 months. The findings are not straightforward. Looking at the aggregate data at a glance, it may seem that the overall rate of experiencing meaningful connections on any social platform has been stable over the past 15 months. However, breaking it down by demographic and social identities gives us a more nuanced picture. Less educated people became significantly less likely to report meaningful connections online (-12.1%), while more educated individuals were significantly more likely to report meaningful connections online (+4.4%). These significant diverging trends led to the gap between the least and most educated groups expanding from 27.8% to 34.2%. Similarly, the lowest income users became somewhat less likely to report meaningful connections online (-2.5%), while the highest income users became somewhat more likely to report meaningful connections online (+3.5%). Examining specific platforms, we see that FaceTime and text messaging, both direct communication-oriented services, exhibited significant increases in the rate of users reporting meaningful connections over the past 15 months. Snapchat and LinkedIn also exhibited significant increases in the rate at which their users report experiencing meaningful connections with others. WhatsApp also exhibited an increase in this rate, but the change was within the margin of error and thus not statistically significant. Conversely, we see non-significant decreases of at least 1% in the rate at which users on Nextdoor, X (formerly Twitter), and Reddit report meaningful connections.

Neely Design Code for Social Media Cited in Technology in Peacebuilding Conversation

In an interview with Adam Farquhar, Research and Data Officer with PeaceRep, Caleb Gichuhi, Africa Lead at Build Up, discussed the evolving relationship between peacebuilders and technologists. During the interview, they reflected on a range of topics, including Caleb’s journey from developing medical apps to SMS technology in peacebuilding initiatives, the concept of “Safety by Design” - referencing the USC Neely Center Design Code - inclusivity in tech, and the use of AI in peacebuilding.

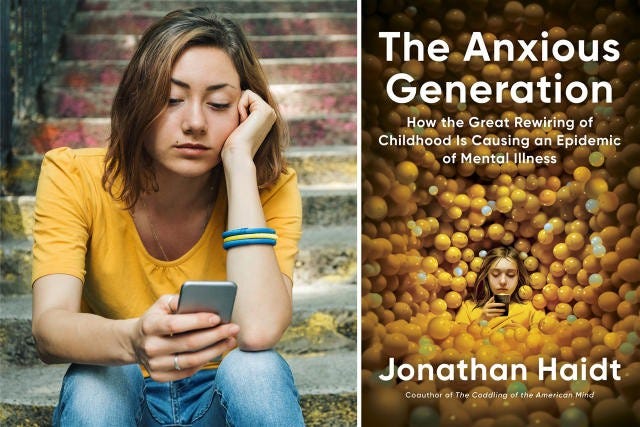

Neely Center Partners with The Anxious Generation

The Neely Center is committed to ensuring that technology is a positive force for our youth. The Anxious Generation, a recent bestseller authored by our longtime collaborator Jonathan Haidt, explores the impact of technology on youth. We are pleased to say that our managing director, Ravi Iyer, contributed to a chapter in The Anxious Generation that addresses “what governments and tech companies can do now.”

In a recent Substack article, Ravi expands on specific ideas from the Design Code for Social Media - particularly how device-based verification could help protect children. “The current system for protecting children online does not work... The providers of operating systems, which is a market that Apple, Google, and Microsoft dominate, could help... Device-based Age Verification could provide the control that parents want without the complexity that prevents the widespread use of current parental settings… Device-based Age Verification would allow users to designate the user of a device as needing added protections across all applications used on that device.”

The article and proposals within are already being considered by legislators across jurisdictions as the Neely Center continues to lead on design-based tech policy.

Social Media & Society Symposium on India

On April 8, 2024, Dr. Jimmy Narang, a postdoctoral scholar affiliated with Neely, organized a workshop that aimed to bridge the gap between academia and practitioners in understanding online misinformation. The workshop was part of the Social Media and Society in India Conference 2024 hosted by the University of Michigan. In the first part, the presenters reviewed the current scientific consensus on the subject, identifying areas where evidence was clear, mixed, or absent. In the second part, invited audience members were asked to share on-the-ground insights and areas they felt were underexplored in the literature and of special relevance to the Indian context.

Designing Against the Rise of Synthetic Content

In this Atlantic article, Nathaniel Lubin, who collaborates with us on a variety of initiatives, discusses what to do about the "junkification" of the internet due to the rise of AI-generated synthetic content. In the article, he calls for platforms that are designed differently, so as not to set bad ecosystem incentives that foster junkification - specifically citing design changes from the Neely Center Design Code for Social Media. He also advocates for public health tools to assess platform risk and product experiment transparency.

Workshops on Tech Incentives and Comment Section Design

In collaboration with the Council on Technology and Social Cohesion, the Neely Center co-hosted events on May 1st and 2nd, 2024, at the Internet Archive in San Francisco. The May 1st event brought together funders, investors, and founders to discuss how prosocial design could be incentivized financially. The May 2nd event brought together researchers and practitioners to examine evidence-based ideas for improving online comment spaces. Ravi Iyer, the Managing Director of the Neely Center, moderated a panel on fixing algorithmic feeds that included Jay Baxter from Twitter and Matt Motyl, who works at the Neely Center as well as the Integrity Institute. Stay tuned for the talks from the May 2nd event, which will be posted publicly in the near future.

Neely Center at Association for Psychological Science Annual Meeting

At the 2024 Association for Psychological Science Annual Meeting, our director, Nate Fast, and managing director, Ravi Iyer, delivered insightful presentations. Nate contributed to a panel discussing the psychological impact of AI, while Ravi explored opportunities for psychological scientists in industry. Their participation highlighted the Neely Center’s commitment to tracking technology’s growing impact on psychological science.

Tech4Good AI Symposium

On April 17, 2024, ShiftSC, a USC student organization dedicated to responsible technology, organized the 2024 Tech4Good AI Symposium at the USC Bovard Auditorium. The Neely Center is proud to sponsor ShiftSC, whose mission aligns closely with the Center’s. This AI Symposium explored the ethical and social impacts of AI and engaged with industry leaders from Google, OpenAI, Juniper Networks, and more. The event featured a keynote speaker, a panel discussion, and an exclusive reception to network with the guest speakers. We applaud ShiftSC and the student organizers for continuing to host impactful events such as this.

ShiftSC VR Pitch Competition

On Saturday, March 30, 2024, ShiftSC, a campus student organization sponsored by the Neely Center, organized a VR pitch competition titled RealityShift. In this one-day competition, student teams competed to pitch an extended reality experience (VR/MR/AR) that addresses a social problem or improves the way something is traditionally done. The top three teams were awarded prizes. The goal of the competition was to get students thinking about how to implement emerging technologies in ways that benefit society and to consider the potential ethical implications. Join us in congratulating ShiftSC for organizing another successful event!